The modern way of building a startup is hypotheses-driven. It works like this:

Define the key assumptions that must be true for your idea to succeed,

Validate each assumption

The first step is not easy but is straightforward. If you do it right, you’ll end up with a long list of assumptions, which causes another problem: what should you actually validate, and where should you start?

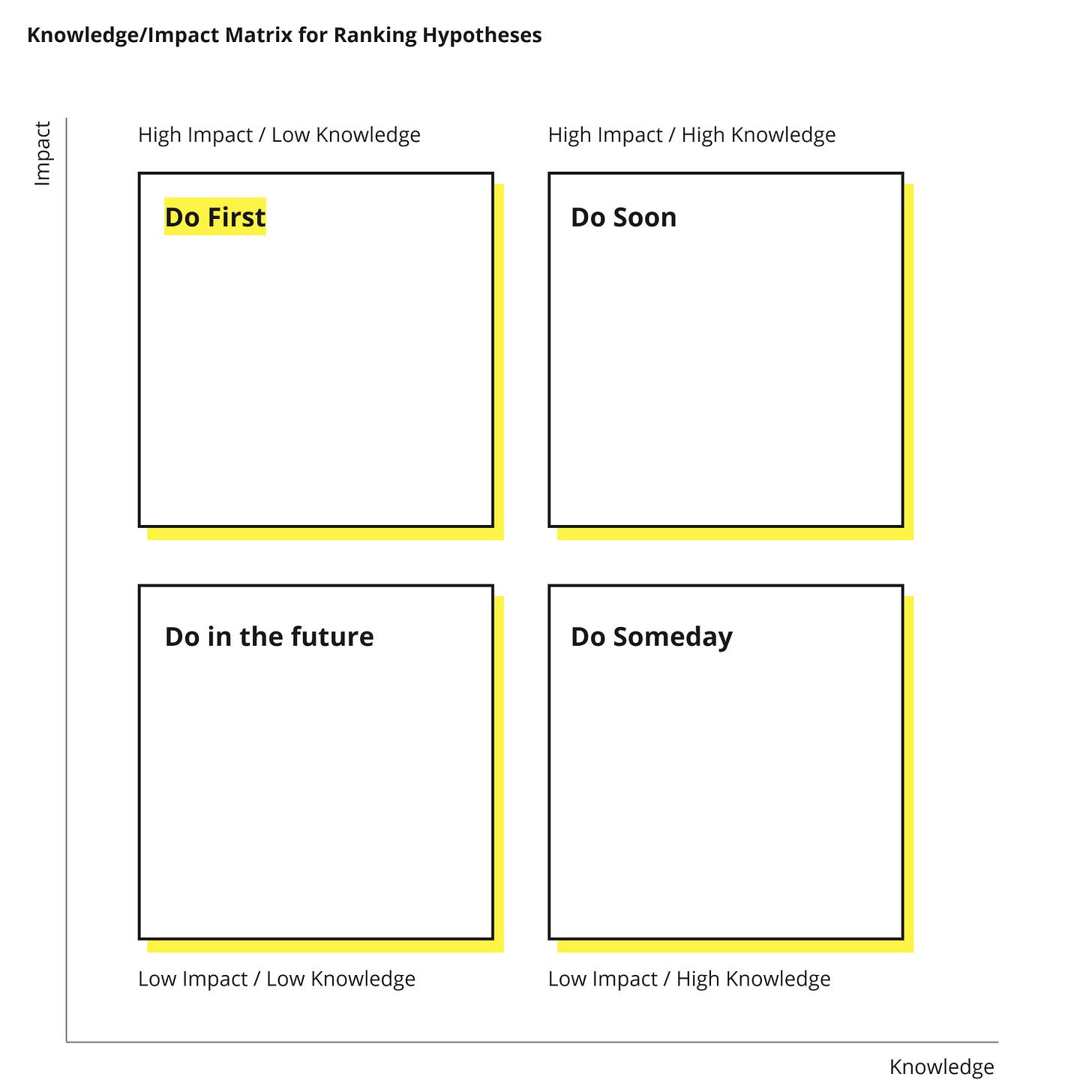

The Knowledge/Impact Matrix

Even though all your assumptions look important, they are not equal. One way to rank them is by using a knowledge/impact matrix. It works like this:

Take each hypothesis and assess it on two dimensions:

Impact on the success of your business

The knowledge you have about the assumption

Your assessment may look something like this:

Now that you have the rating of each hypothesis, you can map it on the knowledge/impact matrix:

The hypotheses that fall in the High Impact/Low Knowledge quadrant are the ones you start with. They are the ones with the highest risk for your business. Validating them first will determine what you end up building next.

Diving Deeper: The BabyWatch Example

To see this process in action, let’s walk through an example.

BabyWatch is a fictitious startup. It’s a SaaS that pairs babysitters with families for short-term engagements. Think of it as Uber for babysitters.

During a brainstorming session, the team identified the following hypotheses:

Note: In real life, these brainstorming boards look a lot messier. I made this one up for illustration purposes only.

Assessing the hypotheses

The team grouped the hypotheses into categories and chose to start with the riskiest one: the assumptions about the parents.

For each hypothesis, they came up with the following scoring:

When assessing for impact, the team asked, “How critical will it be if we got this wrong?”

Generally, anything related to defining and solving the core problems of the users has a high impact. If we turn out to be wrong about the need of users for trustworthy babysitters, then BabyWatch is dead in the water.

In terms of knowledge, the team asked this: “what factual knowledge do we already have about each hypothesis”?

There is a big trap here.

Teams often think they know their users when they only have strong hunches and opinions. The BabyWatch team may assume that, obviously, it’s hard for parents to find babysitters. Two of the founders are parents. They should know. Besides, they have friends who are parents too. They swap stories about how hard it is to find reliable babysitters.

In UX Research, this kind of navel-gazing is called self-referential research. If I have this problem, then many others must have the same problem. Often, this is not the case.

In reality, the experience of the parent-founders may not be reflective of BabyWatch’s target market at all. Proper research may reveal that:

The affluent segment of the market doesn’t have any issue with finding a babysitter. Many have live-in nannies. They can afford the service but they don’t need it.

The mid-level segment is in the opposite camp: they need the service but can’t afford it.

Self-referential research aside, your team and stakeholders may have tons of valuable internal knowledge, e.g. industry experience or market research. Before going out in the wild collecting new data, it’s worth assessing what you already have in-house.

But why focus on knowledge so much? Because…

Lack of knowledge = uncertainty.

Your job as a business owner is to minimize uncertainty. Startups are high-risk ventures because they are drowning in uncertainty. The more you can ascertain (to a reasonable degree) about your venture, the better decisions you’ll be able to make about the future. This includes decisions like not wasting your time and money building the next SHUC (video).

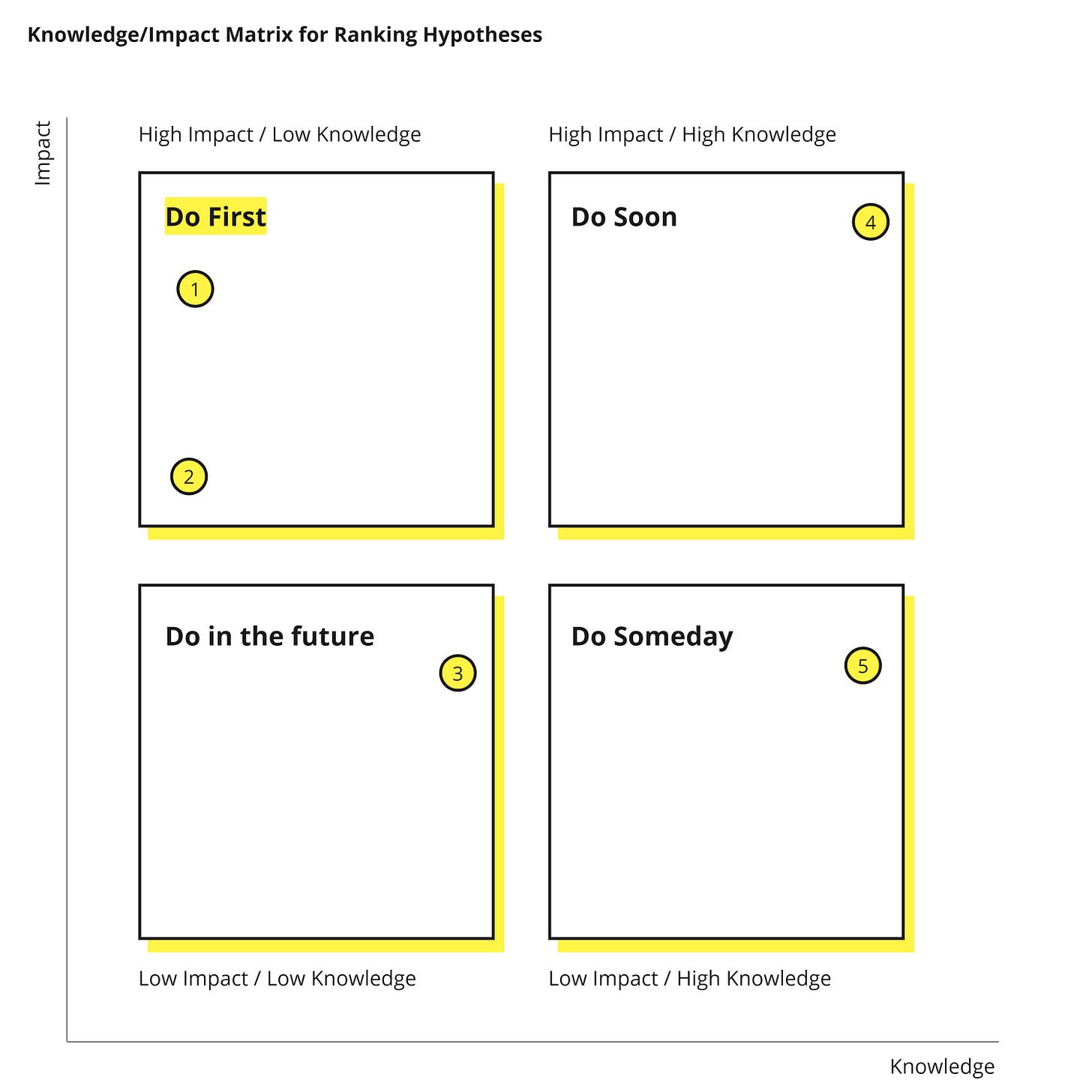

Mapping the hypotheses on a matrix

Once the team assessed each hypothesis, they placed it on the impact/knowledge matrix:

Based on this analysis, the team determined that they should start their research by validating the first hypothesis: parents urgently need trustworthy babysitters. Prioritizing the second assumption (how much parents will pay) was also a good option.

If This is All Too Fuzzy for You

Many of my developer friends get bothered by the subjective nature of mapping the hypotheses. They want something precise and, ideally, quantified. If you’re like them, consider this:

Precision won’t bring much value here. Whether assumption 1 is a touch higher than assumption 2 makes no difference. The goal here is to determine where to start your validation.

For each hypothesis, you can assign scores for impact and knowledge (1-10). Assign numbers to each axis in the matrix and plot each hypothesis.

In my experience, this extra effort usually doesn’t change the results. If it gives you comfort, however, give it a try.